Writing constant time code is hard. We all know that. But I’m always amazed again on how difficult it is. In preparation for making NSS more constant time I looked into certain CPU instructions that are known to be not constant time. So I wrote a little thing to measure the time (CPU cycles) needed for division.

div rcx ; eax is now a/b

The CPU I’m using in this post is an Intel i7-4790 (haswell). Running the same measurements on other processors architectures will most likely yield different results. I’m describing two experiments I did. First, I look at bits in the divisor and how they influence the timing of the division. And then I look at the dividend and its influence.

The Divisor

There are two interesting things we can look at here. The very small divisors as well as the pattern that we get in larger divisors.

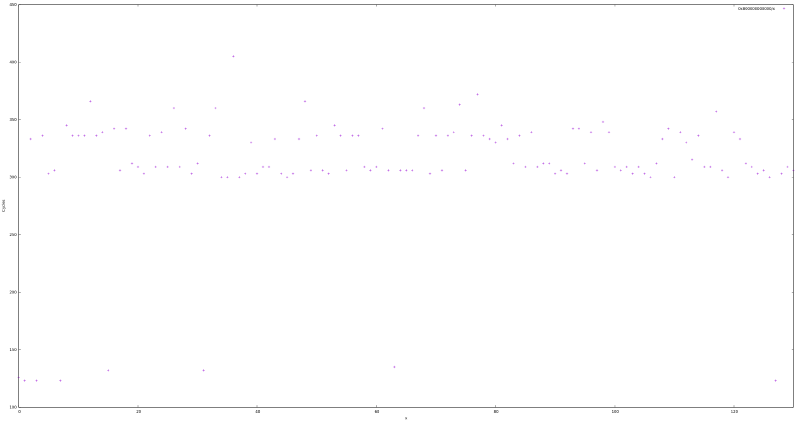

< 129

This graph plots the number of cycles needed for the division of numbers < 130. It’s easy to see that the number of cycles needed is in general around 300. However, there are a couple of “outliers” that need significantly less.

128 123

64 135

32 132

16 132

8 123

4 123

2 123

1 126

You might have spotted the pattern already. The CPU probably doesn’t want to do this expensive division and simply shifts the dividend to get the result. This trend continues later on but is more difficult to observe. (And there’s a more interesting pattern there.)

The larger pattern

The graph shows a striking pattern and makes it obvious that division is not constant-time. Let’s try to understand what’s happening here.

The first part of the pattern can be explained by changing bit-lengths in the divisor. At 8191 and 16383 for example a streak of fast division ends. This is probably because the 8192 and 16384 require an additional bit each.

However, I’m clueless on how to explain the rest of the pattern.

(I’ll update this post when I figured out what’s going on.)